AI-Powered Weld Inspection System and NDT Testing in Automotive Manufacturing

Participants: 12

End Date: 31.12.26

Dataset: dnw1sgyk

Resources: 2 CPU (8.59 GB) | 1 GPU (22.49 GB)

Compute: 0 / 100.00 PF

Submits: 0/5

Tracebloc is a tool for benchmarking AI models on private data. This Playbook describes how a team used tracebloc to benchmark AI models on its welding dataset and found out which model actually delivered the best results. Find out more on our website or schedule a call with the founder directly.

Every missed defect costs money. With tracebloc, an automotive manufacturer applied AI and machine learning to automate weld quality inspection and NDT, and found out which object detection model actually performs best on its own data. The savings potential exceeds €4 million a year.

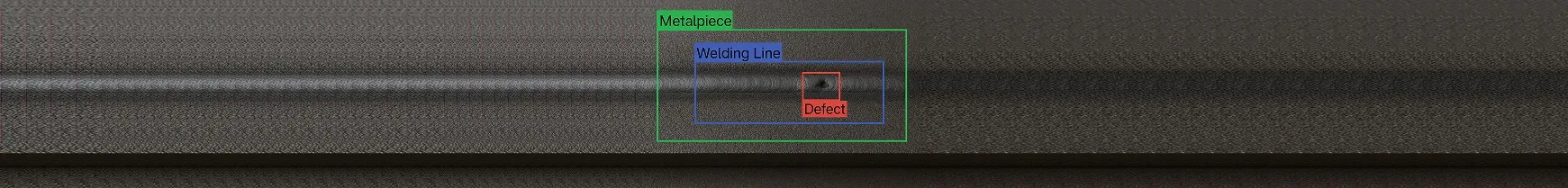

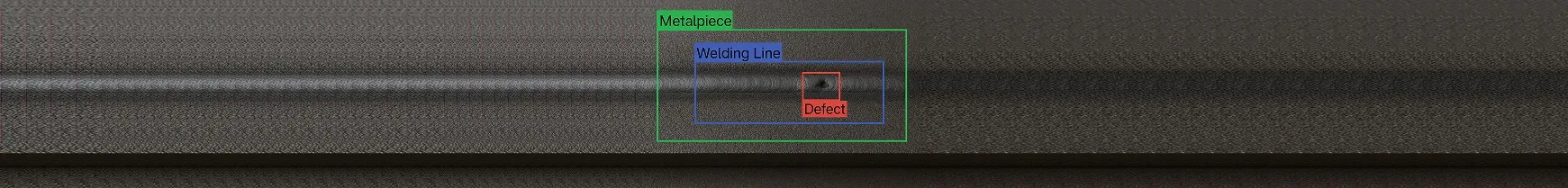

Weld quality is critical in EV manufacturing. Subtle defects can lead to rework, safety risks, or recalls.

Andreas Maier, Head of AI & Automation at a car manufacturer’s main production site, is tasked with improving the non-destructive testing (NDT) weld inspection process. His goal is to automate weld quality inspection with AI and machine learning, reduce undetected defects, avoid costly rework, and maintain structural integrity standards for EV battery housings and chassis.

The manufacturer currently relies on rule-based visual NDT inspection systems that detect most surface defects but miss subtle internal flaws such as micro-cracks or porosity. As the car manufacturer scales its EV production, Andreas wants to overcome these limits. The team plans to implement AI-based defect detection and machine learning weld inspection models capable of real-time, inline evaluation at full line speed.

The car manufacturer has access to a large, labeled internal weld dataset. Although building an in-house system is feasible, Andreas also wants to see what the market offers, so he launches an evaluation of external vendors that provide pretrained computer vision (CV) weld inspection models.

Each vendor submitted a technical and commercial offer:

| VENDOR | MODEL ARCHITECTURE | CLAIMED RECALL / PRECISION | INFERENCE LATENCY (per image) |
| --- | --- | --- | --- |
| A | DSF-YOLO | 99,1% / 98,3% | 3 ms |
| B | YOLOv8 | 99,3% / 99,0% | 7 ms |
| C | Faster R-CNN | 98,8% / 99,2% | 10 ms |

While all vendors claimed recall close to 99%, the car manufacturer focused on false negative rates (missed defects), inference latency, and model robustness to variation in weld types (e.g. spot, seam, and laser welds on aluminum and steel).
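The emphasis on false negatives follows from the standard definitions: recall = TP / (TP + FN), precision = TP / (TP + FP), and false negative rate = FN / (TP + FN) = 1 − recall. The Python sketch below is illustrative only. It assumes defect-level counts have already been obtained by matching predicted boxes to labeled defects (for example with an IoU threshold), a step not shown here, and the counts in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectionCounts:
    """Defect-level confusion counts for one model on one test set (hypothetical)."""
    true_positives: int   # real defects the model flagged
    false_positives: int  # good welds the model flagged as defective
    false_negatives: int  # real defects the model missed


def recall(c: DetectionCounts) -> float:
    # Share of real defects that were detected.
    return c.true_positives / (c.true_positives + c.false_negatives)


def precision(c: DetectionCounts) -> float:
    # Share of flagged welds that really were defective.
    return c.true_positives / (c.true_positives + c.false_positives)


def false_negative_rate(c: DetectionCounts) -> float:
    # Share of real defects that slip through inspection; equals 1 - recall.
    return c.false_negatives / (c.true_positives + c.false_negatives)


if __name__ == "__main__":
    # Hypothetical test set: 1,000 real defects, 983 caught, 17 missed, 10 false alarms.
    counts = DetectionCounts(true_positives=983, false_positives=10, false_negatives=17)
    print(f"recall    = {recall(counts):.1%}")
    print(f"precision = {precision(counts):.1%}")
    print(f"FNR       = {false_negative_rate(counts):.1%}")
```

Because every missed defect carries a cost, a small drop in recall translates directly into a proportional rise in the false negative rate, which is why recall rather than precision drives the comparison here.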

Using tracebloc, Andreas deployed isolated sandboxes on factory edge devices. Vendors never received the raw weld data; instead, they fine-tuned their models on-prem inside these sandboxes, securely and under supervision. This approach protects the manufacturer’s IP and complies with internal data governance policies.

Each model was first tested as delivered (baseline); vendors were then allowed to fine-tune it:

| VENDOR | CLAIMED RECALL | BASELINE RECALL | RECALL AFTER FINE-TUNING |
| --- | --- | --- | --- |
| A | 99,1% | 94,0% | 96,2% |
| B | 99,3% | 94,5% | 98,3% ✅ |
| C | 98,8% | 93,2% | 97,9% |

Vendor B’s YOLOv8 model achieved the highest recall after secure fine-tuning on the car manufacturer’s real production data (98,3%), ahead of Vendor C (97,9%) and Vendor A (96,2%). Vendor C gained the most from fine-tuning (+4,7 percentage points versus +3,8 for Vendor B) but started from a lower baseline. None of the vendors reached 99%; however, the car manufacturer hopes to improve the model further with additional edge-case data and fine-tuning.
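Comparing claimed, baseline, and fine-tuned recall side by side shows how far each claim diverged from measured performance and how much fine-tuning contributed. This minimal Python sketch only copies the percentages from the fine-tuning table; the class and field names are illustrative. It makes visible that the highest final recall (Vendor B) and the largest fine-tuning gain (Vendor C) come from different vendors.

```python
from typing import NamedTuple


class VendorRecall(NamedTuple):
    vendor: str
    claimed: float     # recall stated in the vendor offer, in %
    baseline: float    # recall measured on the manufacturer's data before fine-tuning, in %
    fine_tuned: float  # recall measured after on-prem fine-tuning, in %

    @property
    def claim_gap(self) -> float:
        # How far the baseline fell short of the vendor's claim, in percentage points.
        return round(self.claimed - self.baseline, 1)

    @property
    def fine_tuning_gain(self) -> float:
        # Improvement from fine-tuning, in percentage points.
        return round(self.fine_tuned - self.baseline, 1)


# Figures copied from the fine-tuning results table.
RESULTS = [
    VendorRecall("A", 99.1, 94.0, 96.2),
    VendorRecall("B", 99.3, 94.5, 98.3),
    VendorRecall("C", 98.8, 93.2, 97.9),
]

if __name__ == "__main__":
    for r in RESULTS:
        print(f"Vendor {r.vendor}: claim gap {r.claim_gap} pp, "
              f"gain {r.fine_tuning_gain} pp, final recall {r.fine_tuned}%")
    best_final = max(RESULTS, key=lambda r: r.fine_tuned)
    biggest_gain = max(RESULTS, key=lambda r: r.fine_tuning_gain)
    print("Highest recall after fine-tuning:", best_final.vendor)
    print("Largest fine-tuning gain:", biggest_gain.vendor)
```

Every vendor’s baseline recall landed roughly 5 percentage points below its claim, which is the kind of gap that benchmarking on the manufacturer’s own data is meant to expose.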

Missed defect costs dominate the total cost of ownership and drive vendor selection:

| STRATEGY | RECALL | MISSED DEFECTS | MISSED COST | EDGE DEVICE AND INITIAL SETUP COST | TOTAL ANNUAL COST |
| --- | --- | --- | --- | --- | --- |
| Human + rule-based | ~95,0% | 300.000 | €9,0m | €0 | €9,0m |
| Vendor A | 96,2% | 228.000 | €6,8m | €1,2m | €8,0m |
| Vendor B ✅ | 98,3% | 102.000 | €3,1m | €1,5m | €4,6m |
| Vendor C | 97,9% | 126.000 | €3,8m | €1,4m | €5,2m |
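The cost figures follow from a simple model: missed defects = annual defects × (1 − recall), and total annual cost = missed defects × cost per missed defect + edge device and setup cost. The case study does not state the annual defect count or the unit cost, so this Python sketch infers both from the baseline row (300.000 missed at ~95% recall implies about 6 million defects a year, and €9,0m / 300.000 implies about €30 per missed defect). Both values are assumptions, as is treating the setup cost as an annual figure, which the table implies.

```python
from dataclasses import dataclass

# ASSUMPTIONS inferred from the baseline row, not stated in the case study:
# 300,000 missed defects at ~95% recall -> ~6,000,000 defects per year,
# EUR 9.0m / 300,000 missed defects     -> ~EUR 30 per missed defect.
ANNUAL_DEFECTS = 6_000_000
COST_PER_MISSED_DEFECT_EUR = 30


@dataclass(frozen=True)
class Strategy:
    name: str
    recall: float        # fraction of defects detected, e.g. 0.983
    setup_cost_eur: int  # edge devices and initial setup, treated as annual as in the table


def missed_defects(s: Strategy) -> int:
    # Defects that slip through per year; round() absorbs float noise from 1 - recall.
    return round(ANNUAL_DEFECTS * (1 - s.recall))


def total_annual_cost(s: Strategy) -> int:
    return missed_defects(s) * COST_PER_MISSED_DEFECT_EUR + s.setup_cost_eur


STRATEGIES = [
    Strategy("Human + rule-based", 0.950, 0),
    Strategy("Vendor A", 0.962, 1_200_000),
    Strategy("Vendor B", 0.983, 1_500_000),
    Strategy("Vendor C", 0.979, 1_400_000),
]

if __name__ == "__main__":
    baseline_cost = total_annual_cost(STRATEGIES[0])
    for s in STRATEGIES:
        cost = total_annual_cost(s)
        print(f"{s.name:<20} missed={missed_defects(s):>7,}  "
              f"total=EUR {cost / 1e6:.2f}m  savings=EUR {(baseline_cost - cost) / 1e6:.2f}m")
```

Under these assumptions the model reproduces the missed-defect counts in the table and yields total annual costs of about €9,0m, €8,0m, €4,6m, and €5,2m for the four strategies, i.e. savings of roughly €4,4m for Vendor B.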

Vendor B’s YOLOv8 model outperformed DSF-YOLO and Faster R-CNN after secure fine-tuning, reaching 98,3% recall at sub-10 ms inference latency per image on the edge devices. This is not yet up to the required standard, but Andreas expects more labeling and retraining on edge cases to close the gap. The car manufacturer therefore selects Vendor B for inline weld inspection at full takt time.

Estimated annual savings: over €4m (€9,0m − €4,6m ≈ €4,4m), plus improved product quality and traceability.

Disclaimer:

The persona, figures, performance metrics, and financial assumptions in this case study are fictional and simplified to reflect realistic industry logic. This case is designed to illustrate AI benchmarking and does not reflect actual vendor performance or contractual outcomes.