AI-Powered Breast Cancer Screening and Image Classification for Radiology

Participants

26

End Date

31.12.26

Dataset

dxvssxwk

Resources

2 CPU (8.59 GB) | 1 GPU (22.49 GB)

Compute

0 / 50.00 PF

Submits

0/5

Tracebloc is a tool for benchmarking AI models on private data. This Playbook breaks down how a team used tracebloc to benchmark AI models on their breast cancer dataset and discovered which model truly delivered the best results. Find out more on our website or schedule a call with the founder directly.

Every missed tumour risks lives and costs money, and every false alarm triggers follow-up tests. Using tracebloc, an academic research institute uncovered which object detection model truly performs under pressure, saving over €500k a year compared to the next best approach.

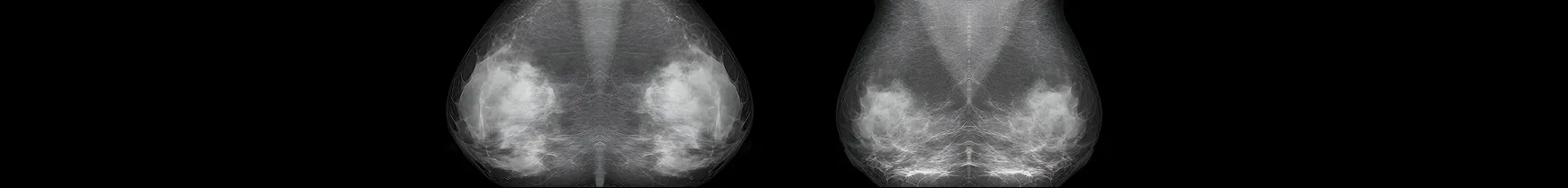

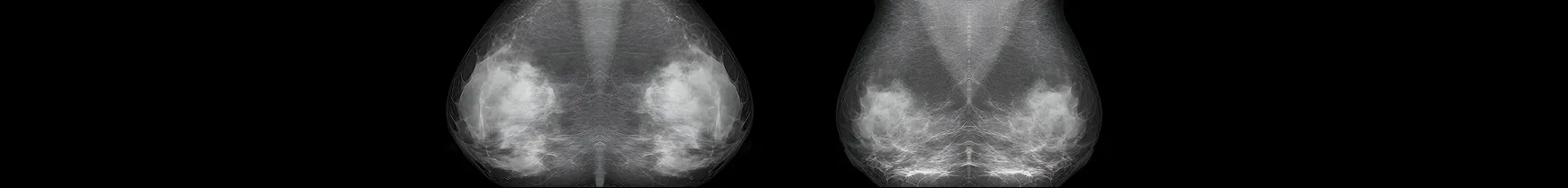

Dr. Elena Fischer, Lead Data Scientist at a major university hospital in Berlin, is tasked with enhancing diagnostic quality in breast cancer screening. Missed tumours lead to delayed treatment and higher mortality, while false positives drive unnecessary biopsies and costs.

Radiologists at the hospital currently achieve 96% sensitivity at a false positive rate of ~5%, which is considered clinically acceptable, but manual review is time-consuming and subject to fatigue. The goal is to provide an AI assistant that supports radiologists, especially in the pre-diagnostic phase, by helping to prioritize scans and reduce false negatives.

The hospital has access to a valuable internal dataset: 50,000 annotated X-ray scans, each labelled malignant or benign. While developing an in-house model remains a potential path, estimated to take 12 to 18 months, Elena decides to first evaluate the market. By conducting a structured assessment of 3 CE-marked AI vendors, she aims to understand the current state of the art, explore available commercial solutions, and gain a clearer picture of the associated costs, performance, and integration effort. This approach also provides access to pretrained models that may generalize better and exhibit less institutional bias than a model trained solely on internal data.

Each vendor submits commercial and technical proposals:

| VENDOR | CLAIMED SENSITIVITY / SPECIFICITY | COST PER IMAGE | INFRASTRUCTURE LOAD |
| --- | --- | --- | --- |
| A | 95% / 94% | €0.50 | Low |
| B | 96% / 95% | €1.00 | Moderate |
| C | 97% / 96% | €1.80 | High |

Elena and her team prioritize sensitivity due to the high risk of missing early tumours, but they cap the false positive rate at 5% to avoid excessive misdiagnoses.
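The selection criterion, sensitivity under a capped false positive rate, can be made concrete with a short sketch. The code below is an illustration only, not tracebloc's evaluation code: the function name `sensitivity_at_fpr`, the toy labels, and the toy scores are all hypothetical. It tries every distinct model score as a decision threshold and keeps the most sensitive one whose false positive rate stays at or below the cap.

```python
def sensitivity_at_fpr(labels, scores, max_fpr=0.05):
    """Return (sensitivity, threshold) for the most sensitive operating
    point whose false positive rate does not exceed max_fpr.

    labels: 1 = malignant, 0 = benign; scores: higher = more suspicious.
    A scan is predicted malignant when its score >= threshold.
    """
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one malignant and one benign scan")

    best_sens, best_thr = 0.0, None
    # Brute force over candidate thresholds; fine for a sketch, slow for large sets.
    for thr in sorted(set(scores)):
        tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= thr)
        fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= thr)
        if fp / n_neg <= max_fpr and tp / n_pos > best_sens:
            best_sens, best_thr = tp / n_pos, thr
    return best_sens, best_thr


# Toy data (hypothetical): 20 benign scans followed by 5 malignant scans.
labels = [0] * 20 + [1] * 5
scores = [0.10] * 18 + [0.90, 0.95] + [0.50, 0.92, 0.97, 0.98, 0.99]

sens, thr = sensitivity_at_fpr(labels, scores, max_fpr=0.05)
print(f"sensitivity at <=5% FPR: {sens:.2f} (threshold {thr})")
```

On this toy data, one false positive out of 20 benign scans is the most the 5% cap allows, so the threshold lands at 0.92 and four of the five malignant scans are caught.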

Using tracebloc, Elena sets up secure sandboxes within the hospital’s IT infrastructure. Vendors do not get access to the raw data. Instead, they are invited to fine-tune their models securely on-prem, ensuring full data protection and regulatory compliance.

After baseline evaluation, vendors fine-tune their models and submit new versions for testing. The results reveal a dramatic difference between claims and real-world performance on the hospital's proprietary data.

| VENDOR | CLAIMED SENSITIVITY | BASELINE SENSITIVITY @ 5% FPR | SENSITIVITY @ 5% FPR AFTER FINE-TUNING |
| --- | --- | --- | --- |
| A | 0.95 | 0.87 | 0.89 |
| B | 0.96 | 0.91 | 0.961 |
| C | 0.97 ✅ | 0.92 ✅ | 0.978 ✅ |

Surprise outcome: Vendor A did not match its claimed sensitivity. Vendor B held its promise but was outperformed by Vendor C, which improved significantly after secure fine-tuning.

| APPROACH | ESTIMATED SENSITIVITY @ 5% FPR | MISSED CANCERS | COST DUE TO MISSED CANCERS | AI COST | TOTAL COST |
| --- | --- | --- | --- | --- | --- |
| Human Only | ~0.96 | 10 | €1,000,000 | €0 | €1,000,000 |
| Vendor A | 0.890 | 28 | €2,800,000 | €25,000 | €2,825,000 |
| Vendor B | 0.961 | 10 | €1,000,000 | €90,000 | €1,090,000 |
| Vendor C | 0.978 | ~6 | €600,000 | €50,000 | €650,000 |

False positive costs (~€5M/year) are held constant across all approaches (5% FPR), so the comparison can focus solely on the impact of missed cancers.
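The arithmetic behind the cost table above can be reproduced with a small sketch. Two inputs are assumptions inferred from the table rather than stated in the text: roughly 250 cancers per year (10 missed at ~0.96 sensitivity) and €100,000 per missed cancer (10 missed cancers costing €1,000,000). The AI cost figures are copied from the table as given, and the function names are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP

ANNUAL_CANCERS = 250               # assumption inferred from the table
COST_PER_MISSED_CANCER = 100_000   # EUR, assumption inferred from the table

# approach -> (sensitivity at 5% FPR, annual AI cost in EUR), copied from the table
APPROACHES = {
    "Human Only": ("0.960", 0),
    "Vendor A": ("0.890", 25_000),
    "Vendor B": ("0.961", 90_000),
    "Vendor C": ("0.978", 50_000),
}


def missed_cancers(sensitivity: str) -> int:
    """Expected missed cancers per year, rounded half up to a whole case."""
    # Decimal avoids binary floating-point noise around .5 boundaries.
    expected = ANNUAL_CANCERS * (1 - Decimal(sensitivity))
    return int(expected.quantize(Decimal("1"), rounding=ROUND_HALF_UP))


def total_cost(sensitivity: str, ai_cost: int) -> int:
    """Cost of missed cancers plus AI cost; false positive cost is excluded
    because it is held constant across approaches."""
    return missed_cancers(sensitivity) * COST_PER_MISSED_CANCER + ai_cost


for name, (sens, ai_cost) in APPROACHES.items():
    print(f"{name}: missed={missed_cancers(sens)}, "
          f"total=EUR {total_cost(sens, ai_cost):,}")
```

Under these assumptions the sketch reproduces the missed-cancer counts and totals in the table, which suggests the table rounds expected misses to whole cases.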

After secure on-prem fine-tuning, Vendor C’s model achieved the highest sensitivity (0.978). The best-performing setup emerged from a hybrid strategy, where the AI model pre-sorts scans and flags high-risk cases for radiologists to review. This setup reduces diagnostic errors by over 60%, improves efficiency, and maintains full clinical oversight: radiologists stay in control at every step.
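The case study does not describe how the pre-sorting is implemented, so the following is only a minimal sketch of one plausible design. The `Scan` type, the `malignancy_score` field, the `build_worklist` function, and the 0.5 flag threshold are all hypothetical. Every scan stays on the worklist, which is itself an assumption made here to reflect that radiologists keep the final say.

```python
from dataclasses import dataclass


@dataclass
class Scan:
    scan_id: str
    malignancy_score: float  # hypothetical model output in [0, 1]


def build_worklist(scans, flag_threshold=0.5):
    """Order scans from most to least suspicious and mark high-risk ones.

    Returns a list of (scan_id, flagged) tuples. No scan is dropped:
    the model only changes the reading order and adds a flag.
    """
    ordered = sorted(scans, key=lambda s: s.malignancy_score, reverse=True)
    return [(s.scan_id, s.malignancy_score >= flag_threshold) for s in ordered]


# Usage with made-up scores.
worklist = build_worklist([
    Scan("scan-a", 0.20),
    Scan("scan-b", 0.90),
    Scan("scan-c", 0.60),
])
for scan_id, flagged in worklist:
    print(scan_id, "HIGH RISK" if flagged else "routine")
```

In practice the flag threshold would be tied to the chosen operating point (here, the one that keeps the false positive rate at 5%) rather than a fixed constant.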

The hospital selects the Human + AI configuration, which delivers both strong medical outcomes and a compelling business case:

| STRATEGY | ESTIMATED SENSITIVITY | MISSED CANCERS | COST DUE TO MISSED CANCERS | TOTAL ANNUAL COST |
| --- | --- | --- | --- | --- |
| Human Only | ~0.960 | 10 | €1,000,000 | €1,000,000 |
| Human + Vendor C | ~0.985 | ~4 | €400,000 | €450,000 ✅ |
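As a quick check on the business case, the sketch below derives the annual saving and the relative reduction in missed cancers from the two rows of the table above. All inputs are copied from that table; the variable names are illustrative.

```python
# Figures copied from the Human Only and Human + Vendor C rows (EUR, cases per year).
human_only_cost = 1_000_000
human_plus_ai_cost = 450_000
human_only_missed = 10
human_plus_ai_missed = 4   # the table lists this as approximately 4

annual_saving = human_only_cost - human_plus_ai_cost
missed_reduction = (human_only_missed - human_plus_ai_missed) / human_only_missed

print(f"annual saving: EUR {annual_saving:,}")
print(f"reduction in missed cancers: {missed_reduction:.0%}")
```

With the rounded count of 4 missed cancers the reduction comes out at 60%. The unrounded figure implied by ~0.985 sensitivity would be slightly higher, which is consistent with the "over 60%" stated in the text.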

The hospital proceeds with a 3-month pilot, including:

Disclaimer:

The persona, figures, performance metrics, and cost calculations in this case study are illustrative and based on fictionalized inputs designed to mimic real-world scenarios. They are intentionally kept at a high level to make the concepts easier to understand and communicate. These do not represent actual clinical results, vendor performance, or contractual terms, and are intended solely for strategic discussion and conceptual exploration.